Corporate scandals now seem to be an everyday occurrence, and a far too frequent one. Their causes are also far too predictable: failures in corporate governance, poor risk management, compliance failures, unreliable intelligence, inadequate security, insufficient resilience, ineffective controls, and failures by assurance providers.

A forensic post-mortem investigation into the cause of any corporate scandal or failure will identify a number (or perhaps all) of these deficiencies and weaknesses. But what if we could do a “pre-mortem” investigation? What if we could predict the scandal in advance and head it off by considering all the ways things could go wrong?

Artificial Intelligence is the latest buzz among internal audit departments, and for good reason: it has the potential to completely transform internal audit, as it does many other corporate functions. But there is also a downside: the potential for massive risks that stem from the use of AI. It’s not hard to imagine that these AI risks will come to pass at one or more organizations and blow up into the latest scandal of epic proportions.

Artificial Intelligence technology as it evolves is certain to contribute to the creation, preservation, and destruction of stakeholder value in the coming weeks, months, and years. In terms of value creation, digital and smart technologies are already pervasive, and AI in its many forms, such as machine learning, natural language processing, and computer vision, has the potential to build on this in order to add significant value, to make enormous contributions, and to create long-term positive impacts for society, the economy, and the environment.

It has the potential to solve complex problems and create opportunities that benefit all human beings and their ecosystems. Unfortunately, AI systems also have the potential for tremendous value destruction, and to cause an unimaginable level of harm and damage to human ecosystems, including business, society, and the planet.

Given the deficiencies and weaknesses described above in relation to everyday corporate scandals, one does not have to be a rocket scientist to predict that these same issues are also likely to arise in relation to AI technology. It is therefore incumbent upon our leaders to consider the potential serious impact, consequences, and repercussions which could emerge in relation to the development, deployment, use, and management of AI systems.

Anticipation of Future AI Hazards

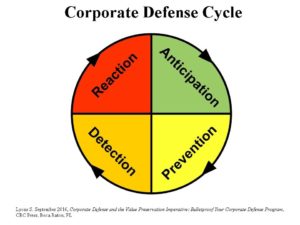

An AI defense cycle can be viewed in terms of the corporate defense cycle, with the same unifying defense objectives representing the four cornerstones of a robust AI defense program.

Prudence and common sense suggest that it is both logical and rational to anticipate the following deficiencies and weaknesses in relation to AI technology, and to fully consider their potential for value destruction.

1. Failures in AI Governance

The current lack of a single comprehensive global AI governance framework has already led to inconsistencies and differences in approaches across various jurisdictions and regions. This is likely to result in potential conflicts between stakeholder groups with different priorities. The lack of a unified approach to AI governance can result in a lack of transparency, responsibility, and accountability which raises serious concerns about the social, moral, and ethical development and use of AI technologies. The ever-increasing lack of human oversight due to the development of autonomous AI systems simply reinforces these growing concerns. Prevailing planet governance issues are also likely to negatively impact on AI governance.

2. Poor AI Risk Management

Currently there appears to also be a fragmented global approach to AI risk management. Some suggest that this approach seems to overemphasize a focus on risk detection and reaction and underemphasize a focus on risk anticipation and prevention. It can tend to focus on addressing very specific risks (such as bias, privacy, security, and others) without giving due consideration to the broader systemic implications of AI development and its use.

Such a narrow focus on AI risks also fails to address the broader societal and economic impacts of AI and overlooks the interconnectedness of AI risks and their potential long-term consequences. Such short-sightedness is potentially very dangerous as it fails to address and keep pace with the potential damage of emerging risks while also failing to prepare for already flagged longer-term risks such as those posed by superintelligence or autonomous weapons systems and other potentially catastrophic outcomes.

3. AI Compliance Failures

AI compliance consists of a patchwork of AI laws, regulations, standards, and guidelines at national and international levels. Because these laws and regulations are not harmonized, they are not clearly aligned and can be inconsistent with one another. This makes them both confusing and ineffective: difficult for stakeholders to comply with, and difficult for regulators to supervise and enforce, especially across borders.

This lack of clear regulation and the lack of appropriate enforcement mechanisms make it difficult to hold actors to account for their actions and can encourage non-compliance, violations, and serious misconduct, leading to the potentially unsafe, unethical, and illegal use of AI technology. The existence of algorithmic bias can result in a lack of fairness and lead to an exacerbation of existing inequality, prejudice, and discrimination. A major concern is that the current voluntary nature of AI compliance and an overreliance on self-regulation are not sufficient to address these potentially systemic issues.

4. Unreliable AI Intelligence

Unreliable intelligence can ultimately result in poor decision making in its many forms. Many AI algorithms can be opaque in nature and are often referred to in terms of a “Black Box,” which hinders the clarity and transparency of the development and deployment of AI systems. Their complexity makes it difficult to interpret or fully comprehend their algorithmic decision-making and other outputs.

It is therefore difficult for stakeholders to understand and mitigate their limitations, potential risks, and the existence of biases. This can further contribute to accountability gaps and make it difficult to hold AI developers and users accountable for their actions. AI development can also lack the necessary stakeholder engagement and public participation which can mean a lack of the required diversity of thought needed for the necessary alignment with social, moral, and ethical values. This lack of transparency and understanding can expose the AI industry to the threat of clandestine influence.

5. Inadequate AI Security

The global approach to AI security also appears to be somewhat disjointed. Data is one of the primary resources of the AI industry, and AI systems collect and process vast amounts of it. AI technologies can be vulnerable to cyberattacks which can compromise assets (including sensitive data), disrupt operations, or even cause physical harm. If AI systems are not properly protected and secured, they could be infiltrated or hacked, resulting in unauthorized access to data which could then be used for malicious purposes such as data manipulation, identity theft, or fraud. This raises concerns about data breaches, data security, and personal privacy.

Indeed, AI-powered malware could help malicious actors to evade existing cyber defenses, thereby enabling them to inflict significant damage on supply chains and critical infrastructure. Examples include damage to power grids and disruption of financial systems.

6. Insufficient AI Resilience

The global approach to AI resilience is naturally impacted by the chaotic approach to some of the other areas noted above. Where AI systems are vulnerable to cyberattacks, this can allow hackers to disrupt operations leading to possible unforeseen circumstances which are difficult (if not impossible) to prepare for. This can impact on the reliability and robustness of the AI system and its ability to perform as intended in real-world conditions and to withstand, rebound, or recover from a shock, disturbance or disruption. AI systems can of course also make errors, incorrect diagnoses, faulty predictions, or other mistakes, sometimes termed “hallucinations.”

Where an AI system malfunctions or fails for whatever reason, this can lead to unintended consequences or safety hazards that could negatively impact on individuals, society, and the environment. This may be of particular concern in critical domains such as power, transportation, health, and finance.

7. Ineffective AI Controls

The global approach to AI controls also seems to be somewhat disorganized. Once AI systems are deployed, it can be difficult to change them. This can make it difficult to adapt to new circumstances or to correct mistakes. There are therefore some concerns that an overemphasis on automated technical controls (such as bias detection and mitigation), combined with insufficient attention to the importance of human control, can create a false sense of security and mask the need for human control mechanisms.

As AI systems become more sophisticated, there is a real risk that humans will lose control over AI, leading to situations where AI makes decisions with unintended and potentially harmful consequences for individuals’ lives. Increasing the autonomy of AI systems without the appropriate safeguards and controls in place raises valid concerns about issues such as ethics, responsibility, accountability, and potential misuse.

8. Failures by AI Assurance Providers

There is currently no single, universally accepted framework or methodology for AI assurance. Different organizations and countries have varying approaches, leading to potential inconsistencies. The opaque nature and increasing complexity of AI can make it difficult to competently assess AI systems, creating gaps in assurance practices, and thus hindering the provision of comprehensive assurance.

The expertise required for effective AI assurance is often a scarce commodity and may be unevenly distributed, which in turn can create accessibility challenges for disadvantaged areas and groups. The lack of transparency, ethical concerns, and the lack of comprehensive AI assurance can lead to an erosion of public trust and confidence in AI technologies, which can hinder their adoption and create resistance to their potential benefits. Given all of the above, the provision of AI assurance can be a potential minefield for assurance providers.

AI Value Destruction and Collateral Damage

Should any assurance provider worth their salt undertake to benchmark these eight critical AI defense components against a simple five-step maturity model (1. Dispersed, 2. Centralized, 3. Global (Enterprise-wide), 4. Integrated, 5. Optimized), then each one of them, individually and collectively, would currently be rated as being only at step 1, Dispersed. This level of immaturity in itself represents a recipe for value destruction.

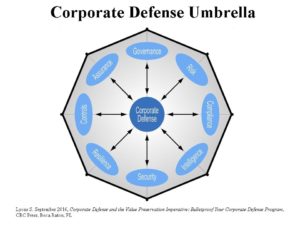

Each of these eight critical AI defense components is interconnected, intertwined, and interdependent: each one impacts on, and is impacted by, each of the other components. They represent links in a chain where the chain is only as strong as its weakest link. Collectively they can provide an essential cross-referencing system of checks and balances which helps to preserve AI stakeholder value. Therefore, the existence of deficiencies and weaknesses in more than one of these critical components can collectively result in exponential collateral damage to stakeholder value.
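To make the benchmarking idea concrete, the short Python sketch below rates the eight components against the five-step maturity model and applies the weakest-link principle. It is an illustration only: the component keys, the function names, and the choice to take the minimum rating as the overall score are assumptions made for this example, not a published scoring method.

```python
from enum import IntEnum


class Maturity(IntEnum):
    """The five steps of the maturity model named in the text."""
    DISPERSED = 1
    CENTRALIZED = 2
    GLOBAL = 3  # enterprise-wide
    INTEGRATED = 4
    OPTIMIZED = 5


# Hypothetical keys for the eight AI defense components.
COMPONENTS = (
    "governance", "risk_management", "compliance", "intelligence",
    "security", "resilience", "controls", "assurance",
)


def overall_maturity(ratings: dict[str, Maturity]) -> Maturity:
    """Assumed aggregation: the chain is only as strong as its weakest link."""
    missing = [c for c in COMPONENTS if c not in ratings]
    if missing:
        raise ValueError(f"unrated components: {missing}")
    return Maturity(min(ratings[c] for c in COMPONENTS))


def weakest_links(ratings: dict[str, Maturity]) -> list[str]:
    """Return the components sitting at the lowest maturity step."""
    lowest = overall_maturity(ratings)
    return [c for c in COMPONENTS if ratings[c] == lowest]


# Illustrative ratings only, not an assessment of any real organization.
example = {c: Maturity.OPTIMIZED for c in COMPONENTS}
example["security"] = Maturity.DISPERSED
print(overall_maturity(example).name, weakest_links(example))
```

Under this assumption, a single component left at Dispersed drags the whole program down to Dispersed, however mature the other seven may be.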

Examples of Potential Value Destruction

Misuse and Abuse: AI technologies can be misused and abused for all sorts of malicious purposes with potentially catastrophic results. They can be used for deception, to shape perceptions, or to spread propaganda. AI-generated deepfake videos can be used to spread false or misleading information, or to damage reputations. Other sophisticated techniques could be used in targeted disinformation campaigns to manipulate public opinion, undermine democratic processes (elections and referendums), and destabilize social cohesion (polarization and radicalization).

Privacy, Criminality, and Discrimination: AI-powered surveillance such as facial recognition can be intentionally used to invade people’s privacy. AI technologies can help in the exploitation of vulnerabilities in computer systems and can be applied for criminal purposes such as committing fraud or the theft of sensitive data (including intellectual property). They can be used for harmful purposes such as cyberattacks and to disrupt or damage critical infrastructure. In areas such as healthcare, employment, and the criminal justice system, AI bias can lead to discrimination against certain groups of people based on their race, gender, or other protected characteristics. It could even create new forms of discrimination, potentially undermining democratic freedoms and human rights.

Job Displacement and Societal Impact: As AI technologies (in automobiles, drones, robotics, and other areas) become more sophisticated, they are increasingly capable of performing tasks that were once thought to require human workers. AI-powered automation of tasks raises concerns relating to mass job displacement (typically affecting the most vulnerable) and the potential for widespread unemployment, which could impact on labor markets and social welfare, potentially leading to business upheaval, industry collapse, economic disruption, and social unrest. AI also has the potential to amplify and exacerbate existing power imbalances, economic disparities, and social inequalities.

Autonomous Weapons: AI-controlled weapons systems could make decisions about when and whom to target, or potentially make life-and-death decisions (and kill indiscriminately) without human intervention, raising concerns about ethical implications and potential unintended consequences. Indeed, the development and proliferation of autonomous weapons (including WMDs) and the competition among nations to deploy weapons with advanced AI capabilities raise fears of a new arms race and the increased risk of a nuclear war. This potential for misuse and possible unintended catastrophic consequences could pose a threat to international security, global safety, and ultimately humanity itself.

The Singularity: The ultimate threat potentially posed by the AI singularity or superintelligence is a complex and uncertain issue which may (or may not) still be on the distant horizon. The potential for AI to surpass human control and pose existential threats to humanity cannot and should not be dismissed and it is imperative that the appropriate safeguards and controls are in place to address this existential risk. The very possibility that AI could play a role in human extinction should at a minimum raise philosophical questions about our ongoing relationship with AI technology and our required duty of care. Existential threats cannot be ignored and addressing them cannot be deferred or postponed.

AI Value Preservation Imperative

Under the prevailing circumstances, the occurrence of some or all of the above AI-related hazards represents both an unacceptably high probability and an unacceptably high impact, with potentially catastrophic outcomes for a large range of stakeholder groups. Serious stewardship, oversight, and regulation concerns have already been publicly expressed by AI experts, researchers, and backers. This is an urgent issue which requires urgent action. It is one matter where a proactive approach is demanded, as we simply cannot accept a reactive approach to this challenge. In such a situation “prevention is much better than cure,” and it is certainly not a time to “shut the barn door after the horse has bolted.”

Addressing this matter is by no means an easy task but it is one which needs to be viewed as a compulsory or mandatory obligation. Like many other challenges facing human beings on Planet Earth this is one that will require global engagement and a global solidarity of purpose.

AI value preservation requires a harmonization of global, international, and national frameworks, regulations, and practices to help ensure consistent implementation and the avoidance of fragmentation. This means greater coordination, knowledge sharing, and wider adoption in order to help ensure a robust and equitable global AI defense program.

This needs to begin with a much greater appreciation and understanding of the nature of AI value dynamics (creation, preservation, and destruction) in order to help foster responsible innovation. Sooner rather than later, the approach to due diligence needs to include adopting a holistic, multi-dimensional and systematic vision that involves an integrated, inter-disciplinary, and cross-functional approach to AI value preservation. Such an approach can help contribute to a more peaceful and secure world, by creating a more trustworthy, responsible, and beneficial AI ecosystem for all.

This pre-mortem simply cannot be allowed to develop into a post-mortem!

Sean Lyons is a value preservation & corporate defense author, pioneer, and thought leader. He is the author of “Corporate Defense and the Value Preservation Imperative: Bulletproof Your Corporate Defense Program.”