There is a common joke among physicists that fusion energy is 30 years away … and always will be. You could say something similar about artificial intelligence (AI) and robots taking all our jobs. The risks of AI and robotics have been expressed vividly in science fiction by the likes of Isaac Asimov as far back as 1942 and in news articles and industry reports pretty much every year since. “The machines are coming to take your jobs!” they proclaim. And yet, most of us internal auditors still head to the office or log in from home each weekday morning.

The reality is less striking but potentially just as worrying. Most people expect that one day a machine will be built that instantly knows how to do a certain job, internal auditing included, and that those jobs will then be gone forever. More likely, AI and smart systems will gradually permeate the everyday tasks we perform at work and become critical parts of the business processes our units and companies run. (Indeed, many professions and industries have already been greatly disrupted by AI and robotics.)

Technology companies have been so successful over the last 30 years partly by following the common mantra of “move fast and break things.” That was perhaps just about acceptable when it meant connecting online with a friend from high school to find out what they had for breakfast, or searching the World Wide Web for exactly the right cat meme with a well-crafted string of words.

When the consequences might now include entrenching biases in Human Resources processes, enabling mass automated biometric surveillance, or simply not understanding what a system is doing (so-called ‘black boxes’), the level of oversight and risk management needs to be much higher.

The Regulatory Environment

Some existing regulation already covers aspects of this brave new world. For example, in the European Union, Article 22 of the General Data Protection Regulation (GDPR), on automated individual decision-making, gives people the right not to be subject to a decision based solely on automated processing that significantly affects them, such as an algorithm alone deciding whether a customer is eligible for a loan or mortgage. However, the next big thing coming to a company near EU is the AI Act.


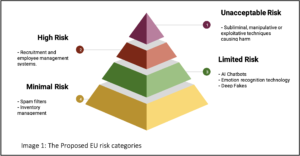

The proposal aims to make the rules governing the use of AI consistent across the EU. The current wording is written in the style of the GDPR, with prescriptive requirements, extraterritorial reach, a risk-based approach, and heavy penalties for infringements. The objective is to bring about a “Brussels effect,” in which regulation in the EU influences the rest of the world.

Other Western jurisdictions are taking a lighter touch than the EU: the United Kingdom is working on a “pro-innovation approach to regulating AI,” and the United States’ recent “Blueprint for an AI Bill of Rights” sets out a non-binding framework. Both contain principles that closely match the proposed legal obligations in the AI Act, suggesting the regulation is already having an impact.

Much of the draft regulation is still being discussed, with the final wording soon to be agreed. There are disagreements across industries and countries on whether some of the text goes far enough or goes too far. For example, there is debate over whether the definition of “AI” should be narrowed, since the current wording could encompass simple rules-based decision-making tools (potentially even Excel macros), or expanded to better capture so-called “general purpose AI.” These are large models that can be used for many different tasks, which makes applying the AI Act’s prescriptive requirements and risk-based approach complex and laborious.

The uncertainty over the final wording has given companies an excuse not to start preparing for the changes. Anyone who lived through the mad rush to become compliant with the GDPR will remember the pain of leaving these things to the last minute. The potential fines, which may be as high as 6 percent of annual revenue depending on the final wording, could be crippling and could even threaten a company’s ability to continue as a going concern.

What Can Internal Auditors Do?

As internal audit professionals, we can start the conversation with the business and with other risk and compliance departments to shine a light on the risks and upcoming regulations they may be unaware of. Our objective is to provide assurance but also to add value to the company, and we can do this through our unique ability to understand risks and the business, and through horizon scanning.

Depending on the organization’s AI risk maturity, internal audit advisory or assurance work can highlight good-practice risk management steps that can be taken early to ease compliance once the regulation is finalized. These steps could include:

1) Identify AI in Use: To manage AI risks appropriately throughout the lifecycle, stakeholders need to be able to identify the systems and processes that use AI. Agreeing on a definition of AI and developing a process to identify where it is in use is the first step. This should cover AI developed in-house, AI already in use through existing tools or services, and AI acquired through the procurement process.

2) Inventory: An inventory recording information such as each system’s intended purpose, the data sources used, design specifications and assumptions, and how and what monitoring will be performed is a good starting point. It can be extended based on your company’s unique characteristics and any specific legal requirements implemented in the future.

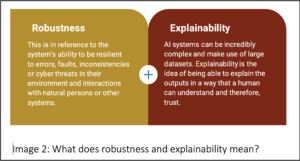

3) Risk Assessments: Since a key aspect of the AI Act is that it is “risk-based,” it is important to have a risk assessment process that ensures you take the steps the regulation requires for the type of AI used: for example, the level of robustness, explainability, and user documentation needed for a given risk tier. It is also important to consider the business and technology risks of using AI. For example, machine learning using neural networks typically requires large training datasets, which can raise data protection and security issues and may also perpetuate biases contained in the data. Suitable experts and stakeholders should be involved in developing and reviewing the risk assessment process.

4) Communications: One area that is often forgotten is communication. It is all well and good having a policy or framework written down, but if it isn’t known and understood by the relevant stakeholders, it’s worth less than the paper it’s printed on. Involving key stakeholders in developing your AI risk management processes can help build a diverse network of champions throughout the business who can act as enablers as the requirements are communicated and the regulation is finalized.

5) Ongoing Monitoring: Risk management is not a one-off exercise, and AI is no exception. Use cases, technology, and the threat landscape change over time, so it is important to include a process for ongoing monitoring of AI systems and their associated risks.
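
For teams that want something concrete to start from, the inventory, risk assessment, and monitoring steps above can be sketched as a small data model. This is a minimal Python sketch under stated assumptions, not a compliance tool: the field names, the assessor-set flags (`prohibited_practice`, `high_risk_use_case`, `interacts_with_people`), and the review intervals are all hypothetical. The tier names mirror the four risk levels (unacceptable, high, limited, minimal) described in the European Commission’s AI Act proposal, but deciding which tier a system falls into is a judgment for the experts and stakeholders doing the assessment; the code only records that judgment and schedules re-reviews. The illustrative HR screening model is flagged as high-risk, in line with recruitment screening appearing among the proposal’s high-risk use cases.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum


class RiskTier(Enum):
    # Tier names follow the four levels in the AI Act proposal; the rules that
    # assign them below are a simplified, hypothetical triage, not legal advice.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AIInventoryEntry:
    # Hypothetical inventory record covering the fields from steps 1 and 2.
    name: str
    origin: str  # "in-house", "existing tool/service", or "procured"
    intended_purpose: str
    data_sources: list[str]
    design_spec_ref: str
    assumptions: list[str]
    monitoring_plan: str
    # Classification flags set by the assessors (step 3), not inferred.
    prohibited_practice: bool = False
    high_risk_use_case: bool = False
    interacts_with_people: bool = False
    last_reviewed: date = field(default_factory=date.today)


def assess_tier(entry: AIInventoryEntry) -> RiskTier:
    """Map assessor-supplied flags to a tier, checking the most severe first."""
    if entry.prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if entry.high_risk_use_case:
        return RiskTier.HIGH
    if entry.interacts_with_people:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Hypothetical review intervals (step 5): higher tiers are re-assessed more often.
# A zero interval means a prohibited system is always flagged for escalation.
REVIEW_INTERVAL = {
    RiskTier.UNACCEPTABLE: timedelta(days=0),
    RiskTier.HIGH: timedelta(days=90),
    RiskTier.LIMITED: timedelta(days=180),
    RiskTier.MINIMAL: timedelta(days=365),
}


def due_for_review(entries: list[AIInventoryEntry], today: date) -> list[str]:
    """Return the names of entries whose review interval has lapsed."""
    due = []
    for entry in entries:
        interval = REVIEW_INTERVAL[assess_tier(entry)]
        if today - entry.last_reviewed >= interval:
            due.append(entry.name)
    return due


# Illustrative entries with made-up details.
screener = AIInventoryEntry(
    name="CV screening model",
    origin="procured",
    intended_purpose="Shortlist job applicants",
    data_sources=["historical hiring decisions"],
    design_spec_ref="vendor specification v1",
    assumptions=["training data reflects current roles"],
    monitoring_plan="quarterly bias testing",
    high_risk_use_case=True,
    last_reviewed=date(2023, 1, 1),
)
helpdesk_bot = AIInventoryEntry(
    name="Customer help-desk chatbot",
    origin="existing tool/service",
    intended_purpose="Answer routine customer questions",
    data_sources=["product FAQ"],
    design_spec_ref="internal wiki page",
    assumptions=["escalates to a human on request"],
    monitoring_plan="monthly transcript sampling",
    interacts_with_people=True,
    last_reviewed=date(2023, 3, 1),
)

print(assess_tier(screener))  # RiskTier.HIGH
print(due_for_review([screener, helpdesk_bot], date(2023, 6, 1)))  # ['CV screening model']
```

Keeping the classification as explicit, assessor-set flags, rather than trying to infer a tier from free-text descriptions, keeps the judgment with the people accountable for it and makes each system’s tier traceable to a recorded decision. The fields and intervals can be extended as your company’s needs and the final regulation become clearer.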

The machines may not be coming to take our jobs just yet, but the risks are already here, and so are the opportunities to get ahead. There may be a long and winding road ahead as we all prepare for a world where AI is commonplace and new regulations and standards try to shape its use, but every journey starts with a single step, and it’s never too early to get going.

Richard Clapton is an Internal Auditor for an international technology and automation company based in the United Kingdom.
